How to automate NSFW image detection with Uploadcare

When building applications where users can upload content, you often have little to no control over what images your users upload.

Even if you restrict which files your users can upload, some individuals may still upload inappropriate, NSFW, or offensive images.

Filtering this content manually can be tedious and time-consuming, but Uploadcare can filter it automatically, saving you time and effort in moderating uploaded content.

In this article, we will go over how Uploadcare can help you not only detect and identify NSFW images uploaded to your projects, but also automatically keep these inappropriate images from being published.

Why NSFW filters are important

Having an NSFW filter in your project is important because it:

- Safeguards your brand reputation: Allowing inappropriate content in your applications can harm your brand’s reputation, since users may speak negatively about your brand when explicit content appears on your platform. Implementing an NSFW filter helps protect your platform’s image and foster a positive perception among your users and partners.

- Protects your users from harmful content: Having an NSFW filter helps prevent the display of inappropriate or harmful content, such as explicit, violent, or offensive imagery, ensuring a safer environment for your users.

- Helps with legal compliance and ethical standards: Many regions and countries have very strict regulations around displaying explicit or harmful content, especially to minors. Having a filter ensures your platform complies with these laws, reducing legal risks and maintaining ethical standards.

- Creates an inclusive and safe space: When you filter NSFW content on your platform, you promote inclusivity by protecting sensitive users and vulnerable communities from exposure to triggering and offensive imagery, fostering a safe and welcoming environment for everyone.

- Enhances the user experience of your platform: By filtering out unwanted and offensive content from your platform, you can provide a more pleasant and professional experience for your users, encouraging better user trust and engagement.

How to use Uploadcare to automatically filter NSFW content

Uploadcare helps you filter content from your project automatically.

This is possible with our REST API add-on endpoint, which uses Amazon Rekognition to scan uploaded images and flag inappropriate or NSFW content by analyzing each image and comparing it to the list of AWS moderation labels.

Automatically filtering content using the API works in two steps.

First, you send a request to the Uploadcare REST API add-on, passing the UUID of the file you want the API to check; the API then updates the appdata field of the file to show whether the file is safe or not.

Second, with this information stored in the file’s appdata, you can use an Uploadcare webhook to be notified when the file’s info is updated, and then either remove the file from your project or handle it however you want. Let’s see this in action.

Using the Uploadcare Rest API add-on

First, let’s send a request to the API add-on endpoint:

    // Replace these constants with your data
    const YOUR_PUBLIC_KEY = 'your_public_key';
    const YOUR_SECRET_KEY = 'your_secret_key';
    const UUID = 'your_file_uuid';

    fetch(
      'https://api.uploadcare.com/addons/aws_rekognition_detect_moderation_labels/execute/',
      {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
          'Accept': 'application/vnd.uploadcare-v0.7+json',
          'Authorization': `Uploadcare.Simple ${YOUR_PUBLIC_KEY}:${YOUR_SECRET_KEY}`
        },
        body: JSON.stringify({ target: UUID })
      }
    )
      .then(response => response.json())
      .then(data => console.log('Success:', data))
      .catch(error => console.error('Error:', error));

The code above performs the following:

- Sends a request to the endpoint using the UUID of the file you want to analyze.

- When the server gets the request, it uses Amazon Rekognition to check whether the image is appropriate.

- The server updates the appdata of that file to indicate its status, that is, whether it is inappropriate or contains NSFW content.

- Returns a request_id for the request sent:

    { "request_id": "6aa7ec2d-809a-4c13-8a85-382c9134bfa3" }
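In a real project you would probably wrap this call in a reusable function. The sketch below is one way to do that, not an official client: `buildModerationRequest` and `requestModeration` are hypothetical names, and the injectable `fetchImpl` parameter is an assumption added to make the function easy to test. It reuses the endpoint, headers, and body shown above, and additionally checks `response.ok` so that an HTTP error is not logged as a success.

```javascript
// Hypothetical helper names; the endpoint, headers and body match the request above.
const ADDON_URL =
  'https://api.uploadcare.com/addons/aws_rekognition_detect_moderation_labels/execute/';

// Builds the arguments for fetch() without sending anything.
function buildModerationRequest(uuid, publicKey, secretKey) {
  if (!uuid) {
    throw new Error('A file UUID is required');
  }
  return {
    url: ADDON_URL,
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Accept': 'application/vnd.uploadcare-v0.7+json',
        'Authorization': `Uploadcare.Simple ${publicKey}:${secretKey}`,
      },
      body: JSON.stringify({ target: uuid }),
    },
  };
}

// Sends the request and resolves to the request_id.
// fetchImpl defaults to the global fetch (available in browsers and Node 18+).
async function requestModeration(uuid, publicKey, secretKey, fetchImpl = fetch) {
  const { url, options } = buildModerationRequest(uuid, publicKey, secretKey);
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`Add-on request failed with HTTP ${response.status}`);
  }
  const { request_id: requestId } = await response.json();
  return requestId;
}
```

Because the secret key is part of the Authorization header, a function like this belongs on your server rather than in browser code.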

Using the request ID, you can check the status of the analysis to know if the request is done or pending:

    // Replace with the request_id returned by the previous request
    const REQUEST_ID = 'your_request_id';

    fetch(
      `https://api.uploadcare.com/addons/aws_rekognition_detect_moderation_labels/execute/status/?request_id=${REQUEST_ID}`,
      {
        method: 'GET',
        headers: {
          'Content-Type': 'application/json',
          'Accept': 'application/vnd.uploadcare-v0.7+json',
          'Authorization': `Uploadcare.Simple ${YOUR_PUBLIC_KEY}:${YOUR_SECRET_KEY}`
        }
      }
    )
      .then(response => response.json())
      .then(data => console.log('Success:', data))
      .catch(error => console.error('Error:', error));

The response to this request contains a status with a value of in_progress, error, done, or unknown:

    {
      "status": "done"
    }

When the API request returns a status of done, you can then request the file info, which will contain an appdata field indicating whether the file contains inappropriate content to display.

You can do this by adding ?include=appdata to the API endpoint when fetching a file from Uploadcare:

    fetch(
      `https://api.uploadcare.com/files/${UUID}/?include=appdata`,
      {
        method: 'GET',
        headers: {
          'Accept': 'application/vnd.uploadcare-v0.7+json',
          'Authorization': `Uploadcare.Simple ${YOUR_PUBLIC_KEY}:${YOUR_SECRET_KEY}`
        }
      }
    )
      .then(response => response.json())
      .then(data => console.log('Response data:', data))
      .catch(error => console.error('Error:', error));

This will provide you with a file info object, and inside the object you’ll find the appdata field:

    {
      "appdata": {
        "aws_rekognition_detect_moderation_labels": {
          "data": {
            "ModerationLabels": [
              {
                "Name": "Weapons",
                "Confidence": 99.96800231933594,
                "ParentName": "Violence"
              },
              {
                "Name": "Violence",
                "Confidence": 99.96800231933594,
                "ParentName": ""
              }
            ],
            "ModerationModelVersion": "7.0"
          },
          "datetime_created": "2025-01-06T22:36:34.833095Z",
          "datetime_updated": "2025-01-06T22:36:34.833123Z",
          "version": "2016-06-27"
        }
      }
    }

Another way to get file info when Amazon Rekognition has scanned the file is to listen for file changes using Uploadcare webhooks. When something changes in the file, the webhook will be fired. Let’s look at this in the next section of this article.
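Whichever way you obtain the file info, the ModerationLabels array still has to be turned into a decision. The following is a minimal sketch of one way to do that: `getFlaggedLabels` is a hypothetical helper, and the default threshold of 80 is an arbitrary assumption you would tune for your own content policy. It returns `null` when the add-on data is missing, so that "not analyzed yet" is not mistaken for "safe".

```javascript
// Hypothetical helper: returns the names of labels at or above a confidence
// threshold, or null if the file has not been analyzed by the add-on yet.
function getFlaggedLabels(fileInfo, minConfidence = 80) {
  const addon = fileInfo?.appdata?.aws_rekognition_detect_moderation_labels;
  if (!addon) {
    return null; // no add-on data: not analyzed yet, which is different from "safe"
  }
  const labels = addon.data?.ModerationLabels ?? [];
  return labels
    .filter((label) => label.Confidence >= minConfidence)
    .map((label) => label.Name);
}

// Trimmed copy of the appdata response shown above
const fileInfo = {
  appdata: {
    aws_rekognition_detect_moderation_labels: {
      data: {
        ModerationLabels: [
          { Name: 'Weapons', Confidence: 99.96800231933594, ParentName: 'Violence' },
          { Name: 'Violence', Confidence: 99.96800231933594, ParentName: '' },
        ],
      },
    },
  },
};

const flagged = getFlaggedLabels(fileInfo);
if (flagged === null) {
  console.log('File has not been analyzed yet');
} else if (flagged.length > 0) {
  console.log(`Flagged: ${flagged.join(', ')}`); // prints "Flagged: Weapons, Violence"
} else {
  console.log('No labels above the threshold');
}
```

A stricter policy could also look at ParentName to block whole categories, for example everything under Violence, regardless of the specific label.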

Note: The add-on API endpoint is also available through SDKs in various languages such as PHP, Python, Ruby, Swift, and Kotlin. For their implementation, please visit the API reference.
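If you prefer polling over webhooks, the status check described earlier in this section can be repeated until the analysis finishes. Here is a rough sketch of such a loop. `waitForDone` is a hypothetical function, the interval and attempt limits are arbitrary assumptions, and `checkStatus` stands for any async function of yours that performs the status request and resolves to the status string. Treating unknown as a failure is a design choice in this sketch, not documented behavior.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Calls checkStatus() repeatedly until it reports "done".
// Rejects on "error" or "unknown", or when maxAttempts is exhausted.
async function waitForDone(checkStatus, { intervalMs = 1000, maxAttempts = 30 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt += 1) {
    const status = await checkStatus();
    if (status === 'done') {
      return status;
    }
    if (status === 'error' || status === 'unknown') {
      throw new Error(`Analysis ended with status "${status}"`);
    }
    // status is "in_progress": wait before the next check, unless this was the last one
    if (attempt < maxAttempts) {
      await sleep(intervalMs);
    }
  }
  throw new Error(`Analysis still in progress after ${maxAttempts} checks`);
}
```

You would pass in a function that sends the status request with your request ID and returns `data.status`, then fetch the file info once the promise resolves.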

Listening for changes in file appdata using Uploadcare hooks

Uploadcare provides webhooks that you can use to listen for changes relating to the files in your project. Examples include file.uploaded for when a file is uploaded and file.info_updated for when information about a file is updated.

To ensure that the whole NSFW filter process is automated, let’s use the file.info_updated webhook to listen for when the appdata of a file is updated and perform specific actions regarding that file.

To use a webhook, you first need to enable it on your project from the Webhooks Settings page. Then, click on the “Add Webhook” button to add a new webhook.

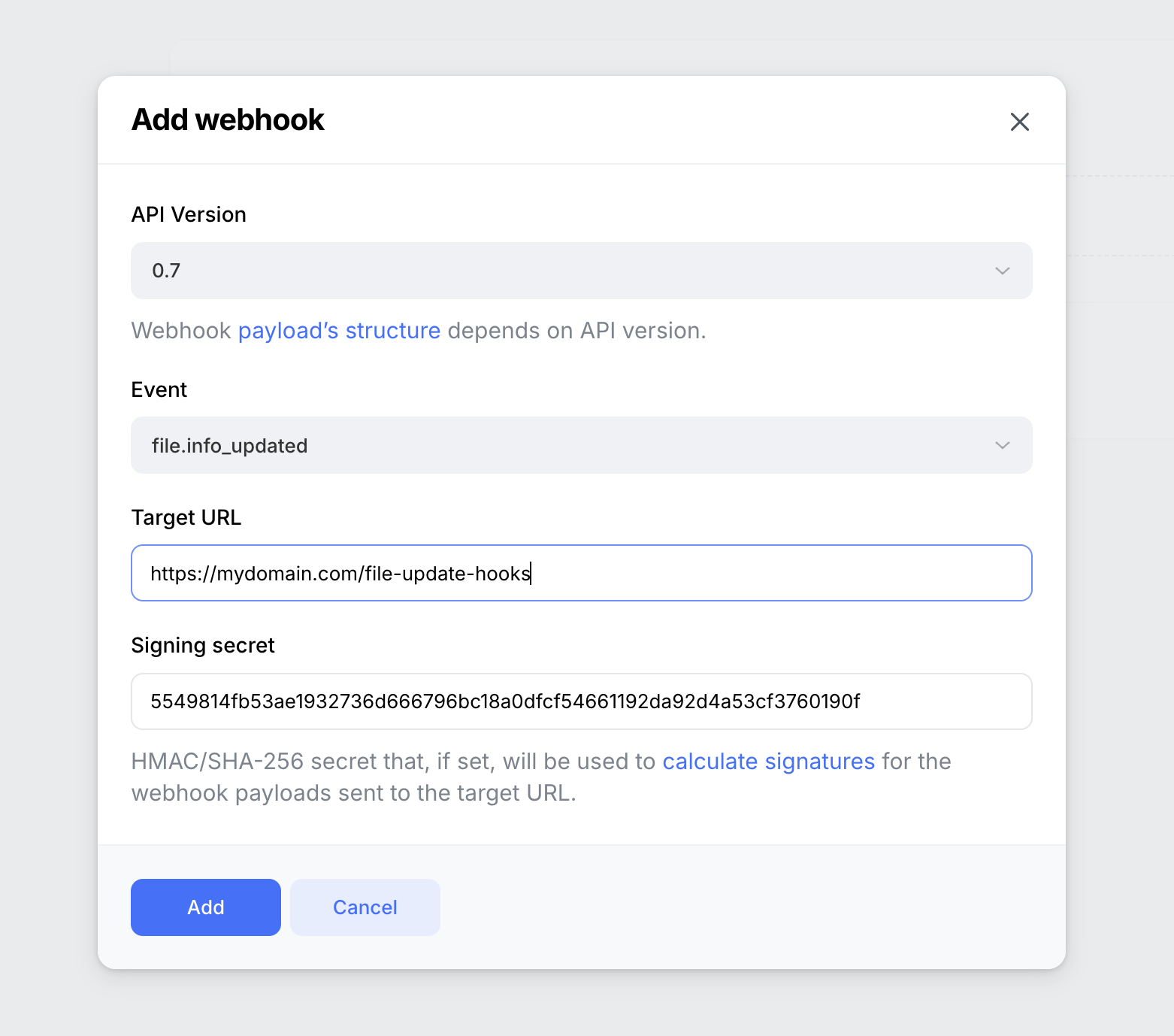

This will open up a modal to fill in the details for the webhook you want to create:

Uploadcare webhook setup modal

Where:

- Event should be file.info_updated to listen for updated changes.

- The target URL should be the URL where you want to listen for the hook event.

- Signing secret is a unique secret generated by you for extra security when Uploadcare interacts with your server.
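The signing secret is only useful if your server actually checks it. The sketch below shows one way to do that in Node.js. It rests on an assumption you should confirm in the webhooks documentation: that Uploadcare sends the signature in an X-Uc-Signature header, formatted as `v1=` followed by the hex HMAC-SHA256 of the raw request body keyed with your signing secret. `isValidSignature` is a hypothetical helper name.

```javascript
const crypto = require('node:crypto');

// Assumption: the header value looks like "v1=<hex HMAC-SHA256 of the raw body>".
// rawBody must be the exact bytes received, not re-serialized JSON.
function isValidSignature(rawBody, signatureHeader, signingSecret) {
  const expected =
    'v1=' + crypto.createHmac('sha256', signingSecret).update(rawBody).digest('hex');
  const expectedBuffer = Buffer.from(expected);
  const receivedBuffer = Buffer.from(String(signatureHeader ?? ''));
  // timingSafeEqual throws when lengths differ, so compare lengths first
  return (
    receivedBuffer.length === expectedBuffer.length &&
    crypto.timingSafeEqual(receivedBuffer, expectedBuffer)
  );
}
```

In a request handler, you would read the raw body, call `isValidSignature(rawBody, request.headers['x-uc-signature'], signingSecret)`, and respond with an error status without processing the payload when it returns false.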

When a change in the file’s data occurs, the webhook sends data about the file to your target URL, like this:

    {
      "initiator": {
        "type": "addon",
        "detail": {
          "addon_name": "aws_rekognition_detect_moderation_labels",
          "request_id": null
        }
      },
      "hook": {
        "id": 2657138,
        "project_id": 100104,
        "project_pub_key": "80000ef90123333b1469",
        "target": "https://03b2-102-88-43-210.ngrok-free.app/api/hooks",
        "event": "file.info_updated",
        "is_active": true,
        "version": "0.7",
        "created_at": "2025-01-08T10:29:46.768409Z",
        "updated_at": "2025-01-08T10:34:35.452479Z"
      },
      "data": {
        "content_info": {
          "mime": {
            "mime": "image/jpeg",
            "type": "image",
            "subtype": "jpeg"
          },
          "image": {
            "color_mode": "RGB",
            "format": "JPEG",
            "height": 2449,
            "width": 2449,
            "orientation": null,
            "dpi": [
              72,
              72
            ],
            "geo_location": null,
            "datetime_original": null,
            "sequence": false
          }
        },
        "uuid": "3fae784a-6df6-4826-a93e-4082edb29da3",
        "is_image": true,
        "is_ready": true,
        "metadata": {},
        "appdata": {
          "aws_rekognition_detect_moderation_labels": {
            "data": {
              "ModerationLabels": [
                {
                  "Confidence": 99.96800231933594,
                  "Name": "Weapons",
                  "ParentName": "Violence"
                },
                {
                  "Confidence": 99.96800231933594,
                  "Name": "Violence",
                  "ParentName": ""
                }
              ],
              "ModerationModelVersion": "7.0"
            },
            "version": "2016-06-27",
            "datetime_created": "2025-01-06T22:36:34.833Z",
            "datetime_updated": "2025-01-08T10:58:07.32Z"
          },
          "uc_clamav_virus_scan": {
            "data": {
              "infected": false
            },
            "version": "1.0.1",
            "datetime_created": "2025-01-06T19:46:52.477Z",
            "datetime_updated": "2025-01-06T19:46:52.477Z"
          }
        },
        "mime_type": "image/jpeg",
        "original_filename": "unsafe.jpg",
        "original_file_url": "https://6ca2u7ybx5.ucarecd.net/3fae784a-6df6-4826-a93e-4082edb29da3/unsafe.jpg",
        "datetime_removed": null,
        "size": 1606397,
        "datetime_stored": "2025-01-06T19:46:52.436724Z",
        "url": "https://api.uploadcare.com/files/3fae784a-6df6-4826-a93e-4082edb29da3/",
        "datetime_uploaded": "2025-01-06T19:46:52.2539Z",
        "variations": null
      },
      "file": "https://6ca2u7ybx5.ucarecd.net/3fae784a-6df6-4826-a93e-4082edb29da3/unsafe.jpg"
    }

With the details about the file provided, you can choose to remove the file using our REST API or even blur the whole file or certain parts of it using our Transformation API.
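To make that choice concrete, here is a rough sketch of a function that reads a webhook payload shaped like the one above and decides what to do next. Everything policy-related is an assumption: `planAction` is a hypothetical name, and the 50 and 90 thresholds are arbitrary. The delete URL assumes the v0.7 REST API removes a file with `DELETE /files/:uuid/storage/`, and the blur URL assumes the operation is written as `/-/blur/:strength/` directly after the UUID; check both against the API reference before relying on them. The function only describes the action and sends nothing itself.

```javascript
const ADDON_NAME = 'aws_rekognition_detect_moderation_labels';

// Returns a description of the follow-up action for a file.info_updated payload.
function planAction(payload, { blurAbove = 50, deleteAbove = 90 } = {}) {
  const labels = payload?.data?.appdata?.[ADDON_NAME]?.data?.ModerationLabels;
  if (!Array.isArray(labels)) {
    // file.info_updated fires for any info change, so moderation data may be absent
    return { action: 'skip' };
  }

  const uuid = payload.data.uuid;
  // Highest confidence among all detected labels (0 when the list is empty)
  const topConfidence = labels.reduce((max, label) => Math.max(max, label.Confidence), 0);

  if (topConfidence >= deleteAbove) {
    // Assumed endpoint: DELETE /files/:uuid/storage/ (REST API v0.7)
    return {
      action: 'delete',
      method: 'DELETE',
      url: `https://api.uploadcare.com/files/${uuid}/storage/`,
    };
  }
  if (topConfidence >= blurAbove) {
    // Assumed URL format: the blur operation goes right after the UUID segment
    return {
      action: 'blur',
      url: payload.file.replace(`${uuid}/`, `${uuid}/-/blur/100/`),
    };
  }
  return { action: 'keep' };
}
```

Your webhook handler would call `planAction(payload)` after verifying the request, then send the DELETE request with the same Accept and Authorization headers used earlier, or store the blurred URL in place of the original one.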

Conclusion

In this article, you learned how to use Uploadcare’s REST API add-on to detect NSFW content, listen for file changes, and filter out files with inappropriate content using a webhook.

Want to learn about more Uploadcare features? Check out our guides on malware detection and on built-in and custom validators to help you validate files before and after uploading.